Our recent Conclave on AI Governance witnessed the launch of eight key initiatives and reports by the CeRAI team. These initiatives were launched by IITM Director, V Kamakoti, alongside Prof. Balaraman Ravindran, Head, Wadhwani School of Data Science and AI, and Centre for Responsible AI.

The Algorithmic–Human Manager: AI, Apps, and Workers in the Indian Gig Economy

Authored by Omir Kumar, Policy Analyst, CeRAI, IIT Madras, and Krishnan Narayanan, Researcher, CeRAI, IIT Madras, and Co-founder and President, itihaasa Research and Digital, this report explores how algorithms and human oversight jointly manage gig workers, from delivery riders to platform drivers.

The study examines the lived experiences of workers, their negotiations with app-based management, and what responsible AI governance should look like for the future of digital labour.

AI Incident Reporting Framework for India

Authored by Dr. Geetha Raju and Prof. Balaraman Ravindran, Head, Wadhwani School of Data Science and AI and Centre for Responsible AI, this discussion paper offers a detailed blueprint for managing AI incidents within India’s unique AI ecosystem.

Policy Chatbot

Developed by Omir Kumar, Policy Analyst, CeRAI, and Sudarsun Santhiappan, Research Lead, CeRAI, PolicyBot is an open-source AI chatbot system designed to make complex policy documents accessible to non-experts.

AI Evaluation Tool v0.2.0 For Conversation Agents

Developed by Sudarsun Santhiappan, Research Lead, CeRAI, and his team, this automated evaluation platform for testing conversational AI systems integrates structured test plans, metrics, and strategies, enabling standardized model and system assessment across tasks, domains, and languages. The AI Evaluation Tool has 41 strategies, 48 evaluation metrics, and 7 test plans with over 400 test cases.

IndiBias-based Contextually Aligned Stereotypes and Anti-Stereotypes (IndiCASA) Dataset

Authored by Santhosh G S, Postbacc Fellow, Akshay Govind, Undergrad Student, Dr. Gokul S Krishnan, Senior Research Scientist, CeRAI, Prof. Balaraman Ravindran, Head, WSAI & CeRAI, and Prof. Sriraam Natarajan, The University of Texas at Dallas, and Associate Faculty Fellow, WSAI, IndiCASA can help improve bias (stereotype) detection and evaluation in the Indian context.

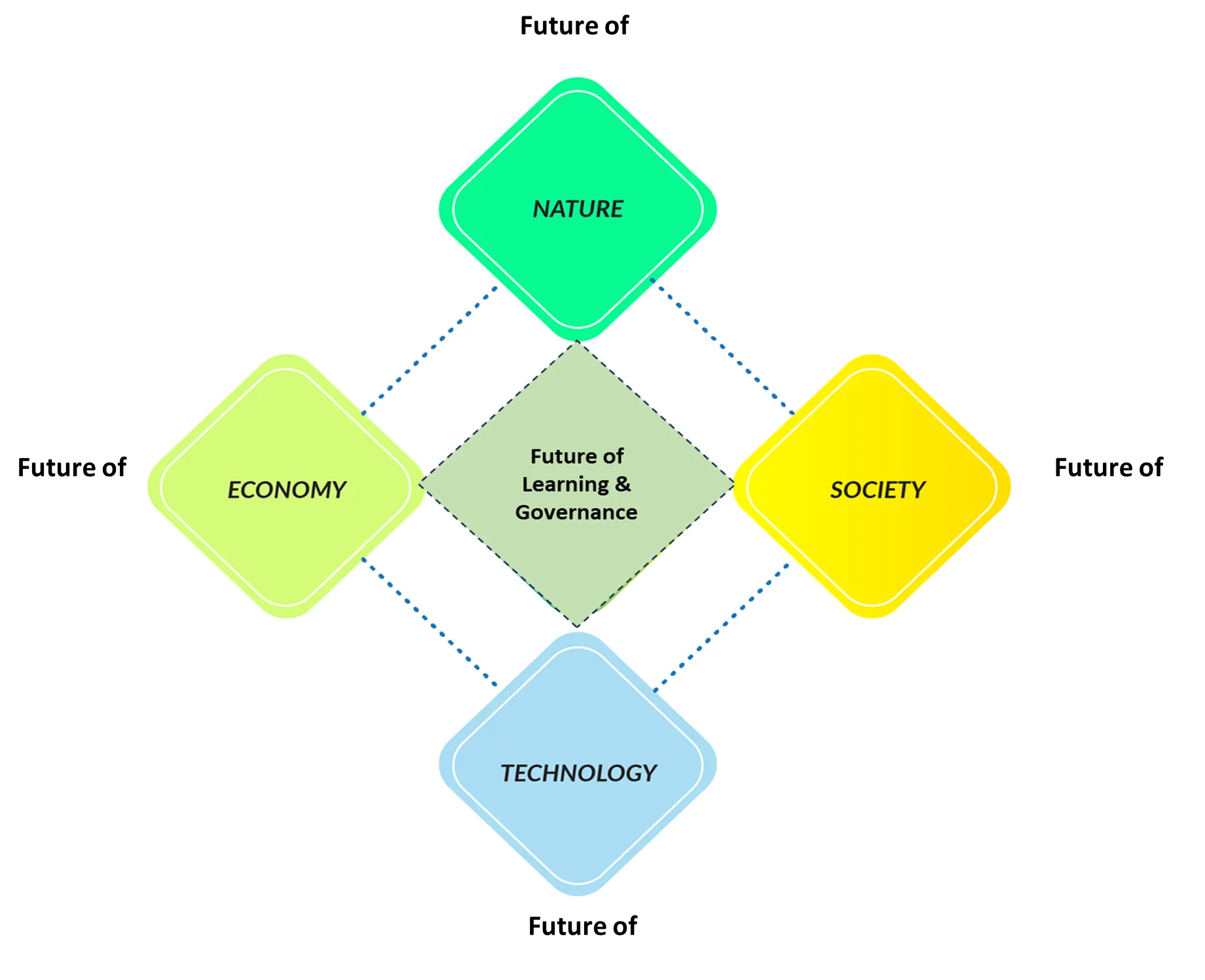

The Co-Intelligence (COIN) Network

A global network of individuals and organisations dedicated to leveraging “co-intelligence” to benefit enterprises and society.